How to create Amazon EKS cluster using Terraform

What is Amazon EKS?

Amazon EKS (Elastic Kubernetes Service) is a fully managed Kubernetes service. It lets you quickly deploy a production-ready Kubernetes cluster in AWS and run containerized applications without operating the control plane yourself. Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

EKS takes care of the control plane (the master nodes). You only need to create the worker nodes.

You can create an EKS cluster with the following node types:

Managed nodes - Linux - Amazon EC2 instances

Fargate - Serverless

We will learn how to create an EKS cluster based on Managed nodes (EC2 instances).

An EKS cluster can be created in the following ways:

1. AWS console

2. AWS CLI

3. eksctl command

4. using Terraform

We will create the EKS cluster and its worker nodes using Terraform.

Pre-requisites:

Install Terraform

Install AWS CLI

Install kubectl – A command line tool for working with Kubernetes clusters.

Create an IAM role with an AdministratorAccess policy or add AWS root keys

Make sure you already have a VPC with at least two subnets. EKS requires the subnets to be in at least two different Availability Zones.
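Before going further, it can help to confirm that the three command-line tools from the list above are actually on your PATH. The snippet below is a minimal sketch; `check_tools` is a hypothetical helper name, not something shipped with Terraform, the AWS CLI, or kubectl.

```shell
# Hypothetical helper: report which of the given tools are missing from PATH.
# Returns 0 if every tool is found, 1 otherwise.
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Run it as `check_tools terraform aws kubectl` and install anything reported as missing.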

Create IAM Role with Administrator Access:

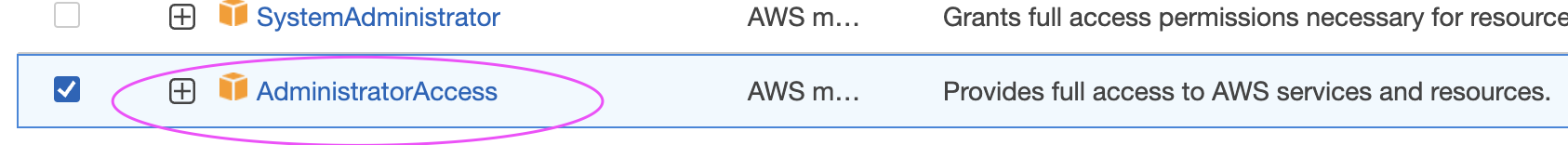

You need to create an IAM role with the AdministratorAccess policy.

Go to the AWS console, open IAM, click on Roles, and then click Create role.

Select AWS service, choose EC2, and click on Next permissions.

Now search for the AdministratorAccess policy and select it.

Skip creating tags.

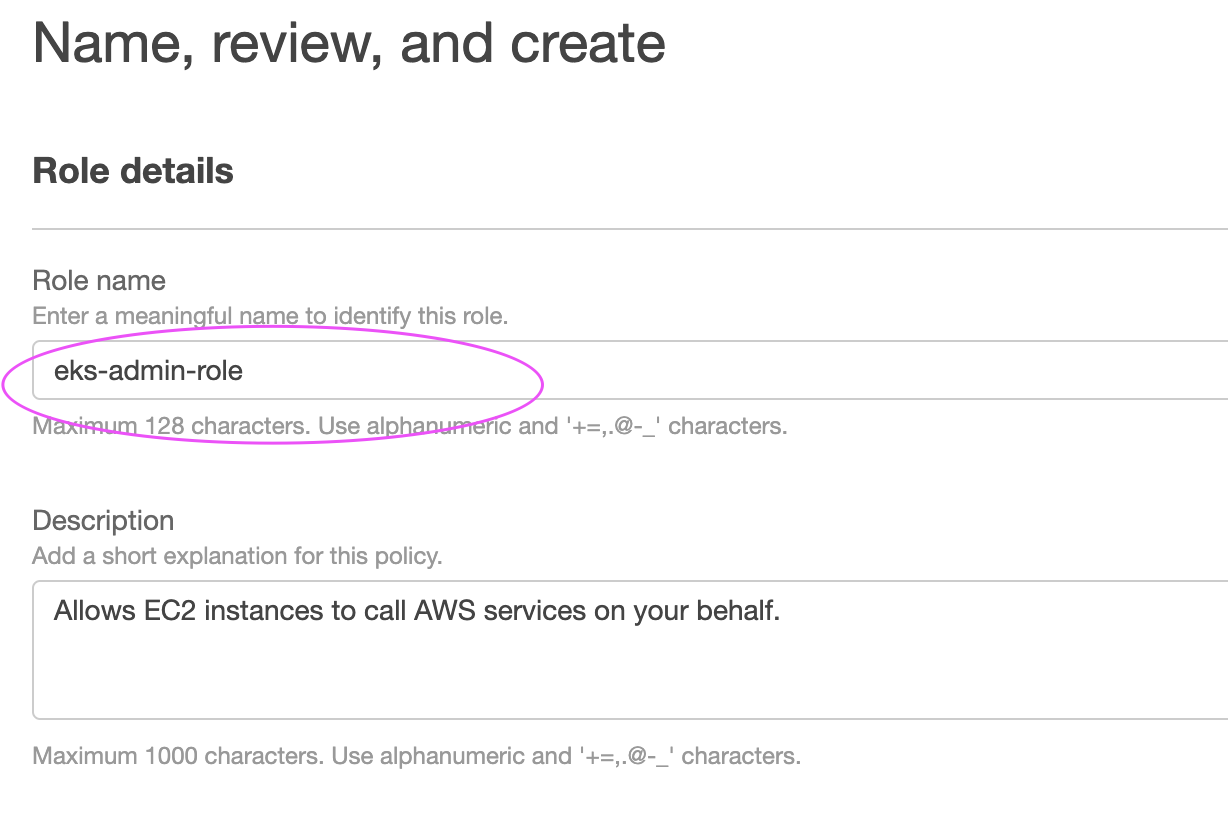

Now give a role name and create it.

Assign the role to the EC2 instance:

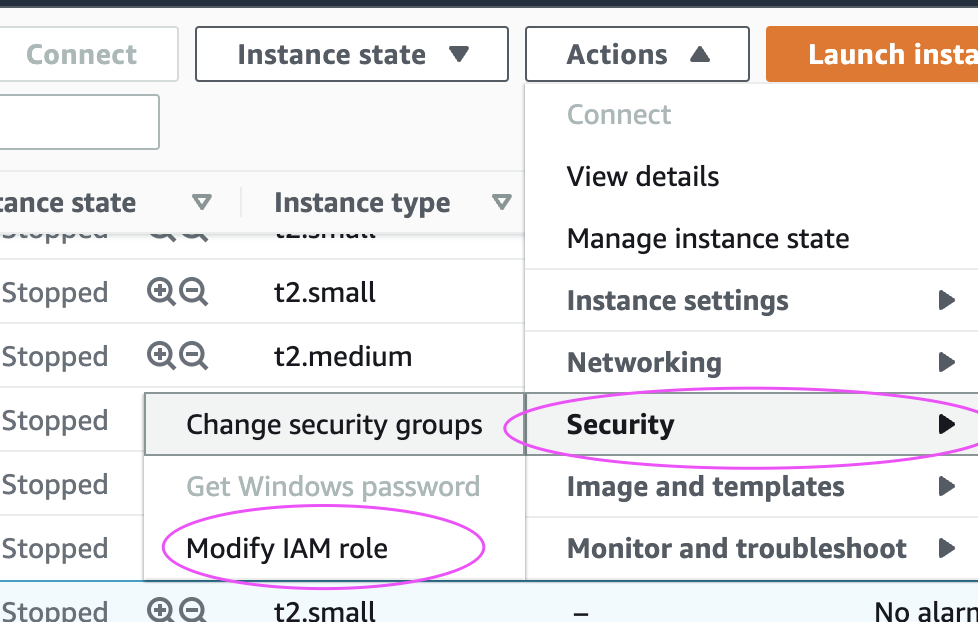

Go to the AWS console, click on EC2, select your EC2 instance, and choose Actions --> Security.

Click on Modify IAM Role

Choose the role you have created from the dropdown.

Select the role and click on Apply.
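Once the role is attached, you can check from the EC2 instance that the AWS CLI really picks it up. The sketch below wraps `aws sts get-caller-identity`; `uses_role` is a hypothetical helper, and the role name you pass is whatever you chose when creating the role. It assumes the instance has no other credentials configured that would take precedence over the instance role.

```shell
# Hypothetical helper: print the caller ARN and succeed only if it belongs
# to the given IAM role (as assumed through the EC2 instance profile).
uses_role() {
  local role_name="$1" arn
  arn="$(aws sts get-caller-identity --query Arn --output text)" || return 1
  echo "$arn"
  case "$arn" in
    *":assumed-role/$role_name/"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `uses_role my-admin-role && echo "role is active"`, where `my-admin-role` is a placeholder for your own role name.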

Create Terraform files

sudo vi variables.tf

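    # Replace the two subnet IDs below with subnets from your own VPC.
    variable "subnet_id_1" {
      type    = string
      default = "subnet-ec90408a"
    }

    variable "subnet_id_2" {
      type    = string
      default = "subnet-0a911b04"
    }

    # Name of the EKS cluster to create.
    variable "cluster_name" {
      type    = string
      default = "my-eks-cluster"
    }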
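EKS expects the cluster subnets to span at least two Availability Zones, so it is worth checking the two subnet IDs before running Terraform. The following is a rough sketch using `aws ec2 describe-subnets`; `distinct_azs` is a hypothetical helper name.

```shell
# Hypothetical helper: print how many distinct Availability Zones
# the given subnet IDs are spread across.
distinct_azs() {
  aws ec2 describe-subnets \
    --subnet-ids "$@" \
    --query 'Subnets[].AvailabilityZone' \
    --output text |
    tr '\t' '\n' |   # text output is tab-separated; put one zone per line
    sort -u |        # drop duplicate zones
    grep -c .        # count the non-empty lines
}
```

Call it with your own IDs, for example `distinct_azs subnet-ec90408a subnet-0a911b04`. A result below 2 suggests that both subnets sit in the same zone.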

sudo vi main.tf

    terraform {
      required_providers {
        aws = {
          source = "hashicorp/aws"
        }
      }
    }

    # IAM role assumed by the EKS control plane.
    resource "aws_iam_role" "eks-iam-role" {
      name = "devops-eks-iam-role"
      path = "/"

      assume_role_policy = <<-EOF
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Principal": {
                "Service": "eks.amazonaws.com"
              },
              "Action": "sts:AssumeRole"
            }
          ]
        }
      EOF
    }

    # Managed policies attached to the cluster role.
    resource "aws_iam_role_policy_attachment" "AmazonEKSClusterPolicy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
      role       = aws_iam_role.eks-iam-role.name
    }

    resource "aws_iam_role_policy_attachment" "AmazonEC2ContainerRegistryReadOnly-EKS" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
      role       = aws_iam_role.eks-iam-role.name
    }

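    # The EKS cluster (control plane), placed in the two subnets.
    resource "aws_eks_cluster" "my-eks" {
      name     = var.cluster_name
      role_arn = aws_iam_role.eks-iam-role.arn

      vpc_config {
        subnet_ids = [var.subnet_id_1, var.subnet_id_2]
      }

      # Wait until the cluster policy is attached to the role
      # before creating the cluster.
      depends_on = [
        aws_iam_role_policy_attachment.AmazonEKSClusterPolicy,
      ]
    }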

    # IAM role assumed by the worker nodes (EC2 instances).
    resource "aws_iam_role" "workernodes" {
      name = "eks-node-group-example"

      assume_role_policy = jsonencode({
        Statement = [{
          Action = "sts:AssumeRole"
          Effect = "Allow"
          Principal = {
            Service = "ec2.amazonaws.com"
          }
        }]
        Version = "2012-10-17"
      })
    }

    # Managed policies attached to the worker node role.
    resource "aws_iam_role_policy_attachment" "AmazonEKSWorkerNodePolicy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
      role       = aws_iam_role.workernodes.name
    }

    resource "aws_iam_role_policy_attachment" "AmazonEKS_CNI_Policy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
      role       = aws_iam_role.workernodes.name
    }

    resource "aws_iam_role_policy_attachment" "EC2InstanceProfileForImageBuilderECRContainerBuilds" {
      policy_arn = "arn:aws:iam::aws:policy/EC2InstanceProfileForImageBuilderECRContainerBuilds"
      role       = aws_iam_role.workernodes.name
    }

    resource "aws_iam_role_policy_attachment" "AmazonEC2ContainerRegistryReadOnly" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
      role       = aws_iam_role.workernodes.name
    }

    # Managed node group: two t2.medium worker nodes spread over the two subnets.
    resource "aws_eks_node_group" "worker-node-group" {
      cluster_name    = aws_eks_cluster.my-eks.name
      node_group_name = "my-workernodes"
      node_role_arn   = aws_iam_role.workernodes.arn
      subnet_ids      = [var.subnet_id_1, var.subnet_id_2]
      instance_types  = ["t2.medium"]

      scaling_config {
        desired_size = 2
        max_size     = 2
        min_size     = 1
      }

      # Create the nodes only after the node role has its policies attached.
      depends_on = [
        aws_iam_role_policy_attachment.AmazonEKSWorkerNodePolicy,
        aws_iam_role_policy_attachment.AmazonEKS_CNI_Policy,
        aws_iam_role_policy_attachment.AmazonEC2ContainerRegistryReadOnly,
      ]
    }

Create EKS Cluster with two worker nodes using Terraform

Now run the following command:

terraform init

This initializes the Terraform working directory and downloads the AWS provider plugin.

Next, run the following command:

terraform plan

The above command shows which resources Terraform will add. The summary line should read:

Plan: 10 to add, 0 to change, 0 to destroy.

Now let's create the EKS cluster:

terraform apply

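Type yes when Terraform asks for confirmation. This creates the 10 resources. Provisioning the cluster and the node group can take ten minutes or more.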
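An optional variation on the plan and apply steps above is to save the plan to a file and then apply exactly that file, so that what gets created matches what was reviewed. The sketch below shows one way to do this; `plan_then_apply` and the file name `tfplan` are hypothetical names chosen for illustration.

```shell
# Hypothetical helper: write the plan to a file, then apply that saved plan.
# Any extra arguments (for example -var overrides) are passed to "terraform plan".
plan_then_apply() {
  local plan_file="${1:-tfplan}"
  if [ "$#" -gt 0 ]; then shift; fi
  terraform plan -out="$plan_file" "$@" && terraform apply "$plan_file"
}
```

Run it as `plan_then_apply tfplan`. Note that applying a saved plan file does not prompt for confirmation, so review the plan output first. To override a default from variables.tf, pass it along, for example `plan_then_apply tfplan -var "cluster_name=demo-eks"`; the name used later in `aws eks update-kubeconfig` must then match.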

Update Kube config

Update the kubeconfig by entering the below command (replace us-east-1 with the region where your cluster was created):

aws eks update-kubeconfig --name my-eks-cluster --region us-east-1

The kubeconfig file is updated under the /home/ubuntu/.kube folder.

You can view the kubeconfig file by entering the below command:

cat /home/ubuntu/.kube/config
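The path above assumes you are logged in as the ubuntu user. As a small sketch for other setups, the helper below prints the file that `aws eks update-kubeconfig` writes to by default: the first path in the `KUBECONFIG` environment variable if it is set, otherwise `~/.kube/config`. The name `kubeconfig_path` is hypothetical.

```shell
# Hypothetical helper: print the default kubeconfig location.
kubeconfig_path() {
  if [ -n "${KUBECONFIG:-}" ]; then
    # KUBECONFIG may hold several colon-separated paths; take the first one.
    echo "${KUBECONFIG%%:*}"
  else
    echo "$HOME/.kube/config"
  fi
}
```

You can then view the file with `cat "$(kubeconfig_path)"`, and `kubectl config current-context` shows which cluster kubectl is pointing at.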

Connect to EKS cluster using kubectl commands

To view the list of worker nodes in the EKS cluster, run:

kubectl get nodes
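Right after the cluster is created, the nodes may still show as NotReady for a short while. Instead of re-running the command by hand, you can let kubectl block until they are ready. This is a minimal sketch; `wait_for_nodes` is a hypothetical wrapper and the 300s default timeout is an arbitrary choice.

```shell
# Hypothetical helper: block until every node reports the Ready condition,
# or fail once the timeout (default 300s) is reached.
wait_for_nodes() {
  local timeout="${1:-300s}"
  kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}
```

For example, `wait_for_nodes 120s && kubectl get nodes`.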

Deploy Nginx on a Kubernetes Cluster:

Let us deploy an app to make sure the Kubernetes cluster works.

The below command creates a deployment:

kubectl create deployment nginx --image=nginx

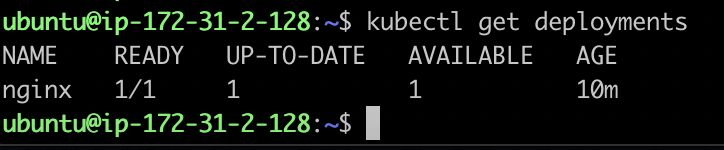

View Deployments:

kubectl get deployments
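To go one step further than listing the deployment, you can wait for the rollout to finish and then look at its pods. The sketch below relies on the fact that `kubectl create deployment nginx` labels the pods with `app=nginx`; `verify_deployment` is a hypothetical helper and the 120s timeout is an arbitrary choice.

```shell
# Hypothetical helper: wait for a deployment to finish rolling out,
# then list its pods together with the nodes they run on.
verify_deployment() {
  local name="${1:-nginx}"
  kubectl rollout status "deployment/$name" --timeout=120s &&
    kubectl get pods -l "app=$name" -o wide
}
```

Run it as `verify_deployment nginx`. The NODE column of the output shows which worker node each pod landed on.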

Delete EKS Cluster

terraform destroy

The above command deletes the EKS cluster and the other resources Terraform created in AWS. It might take a few minutes to clean up the cluster.
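After the destroy finishes, one way to confirm that nothing is left under Terraform's control is to count the entries in the state. This is a small sketch built on `terraform state list`; `remaining_resources` is a hypothetical helper name.

```shell
# Hypothetical helper: print how many resources are still tracked
# in the Terraform state of the current directory.
remaining_resources() {
  # grep -c exits non-zero when the count is 0, so ignore its exit status.
  terraform state list | grep -c . || true
}
```

Running `remaining_resources` in the directory that holds main.tf should print 0 once everything has been destroyed.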

OR

You can also delete the cluster in the AWS console under Elastic Kubernetes Service --> Clusters.

Click on Delete cluster.